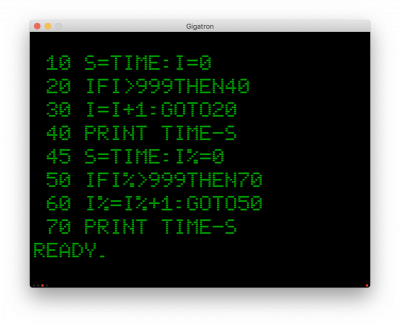

All these back-and-forth conversions make integer variables slower in MS BASIC. I can only see their point in large arrays. This is easy to test:

Attachment:

Screenshot 2019-10-24 at 17.32.12.png [ 344.83 KiB | Viewed 1906 times ]


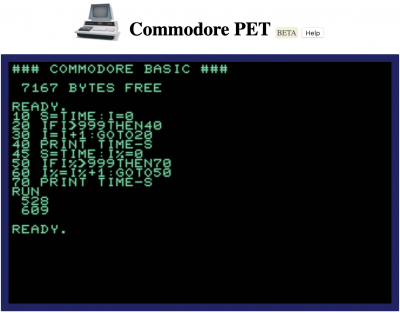

Result in video mode 3:

Attachment:

Screenshot 2019-10-24 at 17.19.38.png [ 317.22 KiB | Viewed 1906 times ]


This is not a completely fair comparison, because the GOTO20 is a bit faster than the GOTO50. But even if you remove lines 10-40, the integer loop still runs slower (3337 ticks).

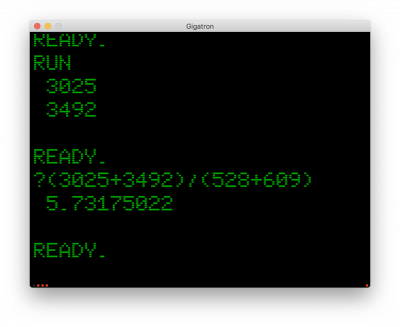

This now also gives us our apples-to-apples benchmark reference, without having to port another Pi program (we already have one for TinyBASIC of course).

Attachment:

Screenshot 2019-10-24 at 17.22.53.png [ 304.29 KiB | Viewed 1906 times ]


Hmm, I wonder how we can compute their ratio? Oh wait, there's only one way that's appropriate:

Attachment:

Screenshot 2019-10-24 at 17.28.55.png [ 320.31 KiB | Viewed 1906 times ]


Wow: with non-trivial machine code, v6502 is only 5.7 times slower than the real thing?! Perhaps that's too good to be true.

Edit: but confirmed by a stopwatch. This debunks the "v6502 is 10 times slower than the original 6502" myth and refines our earlier "8x slowdown" guesstimate. In reality we're running very close to 75 KIPS, or 3 instructions per scanline. Sweet!
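For anyone who wants to sanity-check these figures, here is a rough sketch in Python. It takes the 5.7x slowdown and the 75 KIPS from above and works out what they imply for the real 6502. The 1 MHz reference clock is my assumption (the post does not say which clock the original ran at), and the function names are made up for illustration.

```python
# Back-of-envelope check of the numbers in this post.
# From the post: v6502 runs at about 75 KIPS and is 5.7x slower than a real 6502.
# ASSUMPTION (not stated in the post): the real 6502 is clocked at 1 MHz.

def reference_kips(emulated_kips: float, slowdown: float) -> float:
    """Instruction rate of the real CPU implied by the emulated rate and slowdown."""
    return emulated_kips * slowdown

def cycles_per_instruction(clock_hz: float, kips: float) -> float:
    """Average clock cycles per instruction at a given clock and instruction rate."""
    return clock_hz / (kips * 1000.0)

V6502_KIPS = 75.0               # from the post
SLOWDOWN = 5.7                  # from the post
REFERENCE_CLOCK_HZ = 1_000_000  # assumed 1 MHz reference machine

ref_kips = reference_kips(V6502_KIPS, SLOWDOWN)
cpi = cycles_per_instruction(REFERENCE_CLOCK_HZ, ref_kips)

print(f"implied real 6502 rate: {ref_kips:.1f} KIPS")
print(f"implied average cycles per instruction at 1 MHz: {cpi:.2f}")
```

Under that assumption the real chip would average a bit over 2 cycles per instruction in this benchmark, which is at least in the range 6502 instructions actually take (2 to 7 cycles), so the two figures don't obviously contradict each other.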